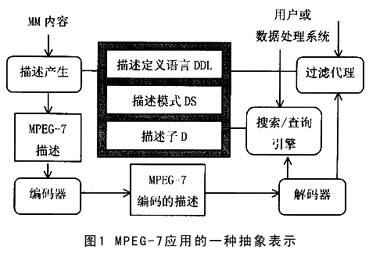

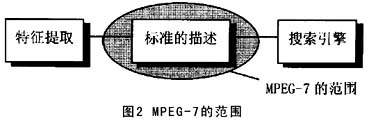

I. What is MPEG-7

We now work in a distributed computing environment in which audiovisual information is created, exchanged, retrieved, and reused. This calls for a way of representing audiovisual information that goes beyond waveform-based or sample-based representations, compression-based representations (such as MPEG-1 and MPEG-2), and even object-based representations (such as MPEG-4). What is needed is a representation that allows some degree of interpretation of the information's meaning, and whose annotations can be passed to, or accessed by, a device or computer code.

In October 1996, MPEG began new work to address these problems. The new member of the MPEG family is called the "Multimedia Content Description Interface", or MPEG-7 for short. Its goal is a standard for describing multimedia content data that serves real-time, non-real-time, and push-pull applications. MPEG does not standardize applications, but it uses them to understand requirements and to evaluate technologies. MPEG-7 does not target any specific application area; it aims to support as broad a range of applications as possible.

MPEG-7 extends the limited capabilities of existing solutions for identifying content and covers more multimedia data types. Specifically, it standardizes a set of "descriptors" for describing various kinds of multimedia information, together with ways of defining other descriptors and structures (called "description schemes"). These descriptions (descriptors and description schemes) are associated with the content itself, which enables fast and efficient searches for material of interest to the user. MPEG-7 also standardizes a language for specifying description schemes, the Description Definition Language (DDL).
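To make the idea of a descriptor associated with content and used for search more concrete, here is a minimal Python sketch built on a color histogram, one of the possible descriptors mentioned in Section II. It is an illustration under stated assumptions, not an MPEG-7 tool: the plain RGB quantization, the histogram-intersection similarity, and the names `color_histogram`, `intersection`, and `search` are hypothetical choices made here, and the normative MPEG-7 color descriptors are defined differently. Both the extraction step and the matching step are exactly the kind of tools that MPEG-7 leaves unstandardized.

```python
from typing import Dict, List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0..255


def color_histogram(pixels: Sequence[Pixel], bins_per_channel: int = 4) -> List[float]:
    """Extract a descriptor value: a normalized, coarsely quantized RGB histogram."""
    step = 256 // bins_per_channel
    hist = [0.0] * (bins_per_channel ** 3)
    for r, g, b in pixels:
        # Quantize each channel; min() keeps 255 in the last bin when 256 is not divisible.
        qr, qg, qb = (min(c // step, bins_per_channel - 1) for c in (r, g, b))
        hist[(qr * bins_per_channel + qg) * bins_per_channel + qb] += 1
    total = len(pixels)
    # Normalize so that images of different sizes yield comparable descriptor values.
    return [h / total for h in hist] if total else hist


def intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Histogram intersection: 1.0 for identical normalized histograms, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def search(query: List[float], index: Dict[str, List[float]], top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank stored descriptor values by their similarity to the query's descriptor value."""
    scored = [(content_id, intersection(query, hist)) for content_id, hist in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical content IDs, each associated with its descriptor value.
index = {
    "sunset.jpg": color_histogram([(250, 120, 30)] * 90 + [(20, 20, 60)] * 10),
    "forest.jpg": color_histogram([(30, 140, 40)] * 100),
    "sea.jpg": color_histogram([(20, 60, 200)] * 100),
}
query = color_histogram([(240, 110, 20)] * 100)
print(search(query, index, top_k=1))  # prints [('sunset.jpg', 0.9)]
```

The comparison happens between descriptor values, not between the raw images, which is the point the concepts in Section II make about features.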
Material carrying MPEG-7 data can include still images, graphics, 3D models, audio, speech, video, and information about how these elements are combined in a multimedia presentation. Special cases of these general data types include facial expressions and personal characteristics.

MPEG-7 complements the other MPEG standards. MPEG-1, MPEG-2, and MPEG-4 represent the content itself, whereas MPEG-7 represents information about the content: "the bits about the bits". An MPEG-3 project once existed, but it was terminated because its HDTV target could be achieved with MPEG-2 tools. Following the sequence, the next standard would have been MPEG-5, but MPEG chose not to follow the numerical order and picked the number 7 instead. At the time, MPEG-5 and MPEG-6 had not been defined. MPEG-7 officially became an international standard in September 2001.

II. Main concepts in MPEG-7

To understand MPEG-7, it helps to know several concepts it defines.

Data is the audiovisual material described with MPEG-7, regardless of its storage, coding, display, transmission, medium, or technology. The definition is deliberately broad and covers graphics, still images, video, film, music, speech, sounds, text, and any other relevant AV media.

A Feature is a distinctive characteristic of the data, such as the color of an image, the pitch of a voice, or the melody of a piece of audio. Features themselves cannot be compared directly; comparisons are made through meaningful feature representations (descriptors) and their instances (descriptor values).

A Descriptor (D) is a representation of a feature. It defines the syntax and semantics of the feature representation and can be assigned descriptor values. A single feature may have several descriptors; for color, a color histogram is one example. Other possible descriptors include the average of the frequency components, the motion field, and the text of a title.

A Descriptor Value is an instance of a descriptor.
Descriptor values are combined with a description scheme to form a description.

A Description Scheme (DS) specifies the structure and semantics of the relationships between its components, which may be descriptors or other description schemes. The difference between a DS and a D is that a D contains only basic data types and does not refer to any other D or DS. For example, a movie can be structured temporally into scenes and shots, with some textual descriptors at the scene level and color, motion, and audio descriptors at the shot level.

A Description consists of a description scheme (the structure) and the set of descriptor values that instantiate it.

A Coded Description is a description that has been encoded to meet requirements such as compression efficiency, error resilience, and random access.

The Description Definition Language (DDL) is a language for creating new description schemes and descriptors, and for extending and modifying existing ones.

Figure 1 shows where MPEG-7 sits in a practical system and how these terms relate. Rounded boxes denote processing tools, rectangles denote static elements, and the shaded area contains the normative elements of the MPEG-7 standard. The DDL provides the mechanism for building description schemes, which in turn serve as the basis for generating descriptions. Note that a binary (coded) representation of a description is not required; a textual representation can be sufficient.

III. The scope of MPEG-7

MPEG-7 targets both stored content (online or offline) and streamed content (for example broadcast, or push models on the Internet), and it can operate in both real-time and non-real-time environments. Here, "real time" means that the description is generated while the content is being captured.
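Returning to the concepts in Section II, the movie example (textual descriptors at the scene level; color, motion, and audio descriptors at the shot level) can be sketched as a small data model. The Python sketch below is a hypothetical illustration, not the normative MPEG-7 schema: `Descriptor` holds only a basic-typed value and never refers to another D or DS, `DescriptionScheme` nests descriptors and child schemes, and all element names (`Movie`, `Scene`, `Shot`, `Title`, `DominantColor`, `Motion`, `AudioLoudness`) and values are invented for this example. Serializing the tree with the standard library's `xml.etree.ElementTree` shows that a plain textual form can carry a complete description. The real MPEG-7 DDL is based on XML Schema, which this sketch does not attempt to reproduce.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import List


@dataclass
class Descriptor:
    """D: represents one feature with a basic-typed value; it never references another D or DS."""
    name: str
    value: str  # the descriptor value, stored as text


@dataclass
class DescriptionScheme:
    """DS: structures its components, which may be descriptors or nested description schemes."""
    kind: str
    descriptors: List[Descriptor] = field(default_factory=list)
    children: List["DescriptionScheme"] = field(default_factory=list)

    def to_xml(self) -> ET.Element:
        """Serialize this scheme and its instantiated values as a textual (XML) description."""
        elem = ET.Element(self.kind)
        for d in self.descriptors:
            ET.SubElement(elem, d.name).text = d.value
        for child in self.children:
            elem.append(child.to_xml())
        return elem


# A movie structured into a scene (textual descriptor) and shots (color, motion, audio descriptors).
movie = DescriptionScheme("Movie", children=[
    DescriptionScheme("Scene", [Descriptor("Title", "Opening")], children=[
        DescriptionScheme("Shot", [Descriptor("DominantColor", "#1E3C78"),
                                   Descriptor("Motion", "pan-left")]),
        DescriptionScheme("Shot", [Descriptor("DominantColor", "#F0A020"),
                                   Descriptor("AudioLoudness", "0.6")]),
    ]),
])

# Scheme structure plus descriptor values = a description, here in textual form.
text_description = ET.tostring(movie.to_xml(), encoding="unicode")
print(text_description)
```

Keeping `Descriptor` free of references is what distinguishes it from `DescriptionScheme` in this sketch, mirroring the D/DS distinction in the text.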
Figure 2 is a highly abstract diagram of the MPEG-7 processing chain, used here to explain the scope of MPEG-7. The chain consists of feature extraction (analysis), the description itself, and the search engine (application). To exploit MPEG-7 descriptions fully, automatic extraction of features (or descriptors) will be extremely useful. Clearly, however, automatic extraction is not always possible: the higher the level of abstraction, the harder automatic extraction becomes, and interactive extraction tools can be used instead. Useful as they are, neither automatic nor semi-automatic extraction tools are part of the standard. The main reason is that standardizing them is not necessary for interoperability, and leaving them out leaves room for competition. Another reason is to let the state of the art keep improving. Search engines likewise fall outside the scope of MPEG-7: standardizing them is not required, and competition will produce the best results.

Like the other members of the MPEG family, MPEG-7 is a standard representation of audiovisual information that meets particular requirements. It builds on other standard representations such as PCM, MPEG-1, MPEG-2, and MPEG-4, so MPEG-7 can reuse parts of existing standards: the shape descriptors used in MPEG-4, for instance, can also serve in MPEG-7, and the motion vectors used in MPEG-1 and MPEG-2 can be exploited as well. Nevertheless, MPEG-7 descriptors do not depend on how the described content is coded or stored. An MPEG-7 description can be attached to an analog film or to a picture printed on paper. Even though MPEG-7 descriptions do not depend on the coded representation of the material, the standard builds on MPEG-4 to some extent. MPEG-4 provides the means to code audiovisual material as objects that have certain temporal (synchronization) and spatial (position in space and 3D perception) relationships.
With MPEG-4 coding, descriptions can be attached to individual elements (objects) within a scene. MPEG-7 can be used independently of the other MPEG standards, but the representation defined in MPEG-4 is well suited as a foundation for it.

MPEG-7 focuses on providing new solutions for describing audiovisual content. Describing text is therefore not its primary goal, although audiovisual content may contain or refer to text. For text, MPEG-7 will consider existing solutions developed by other standards bodies and support them as appropriate.

IV. How to describe multimedia content

1. Description principles

MPEG-7 adopts a comprehensive set of description principles.

(1) Multiple and hierarchical descriptions

Because description features must be meaningful within a particular application, they differ across user domains and applications. The same material can therefore be described with different types of features, each suited to a specific application area. This raises the issues of multiple descriptions and hierarchical descriptions. MPEG-7 allows descriptions at different granularities, offering different levels of discrimination. Multiple descriptions means the ability to describe the same material several times at different stages of the multimedia production process, and to attach descriptions to multiple copies of the same material. Hierarchical descriptions are organized by level of abstraction, so that multimedia content can be described at several levels. The level of abstraction is tied to how features are extracted: many low-level features can be extracted automatically, whereas high-level features require more human interaction.
In addition, media data can be described according to different application needs: for example, in terms of its constituent objects, in terms of motion sequences for motion analysis, or in terms of the plot structure of a video. Description schemes support hierarchical representations of different descriptors: the description at layer N enhances, refines, or supplements the description at layer N-1, so queries can be processed efficiently layer by layer.

(2) Relationship descriptions

Description schemes express the various relationships between descriptors, and a descriptor can be used in several description schemes. MPEG-7 supports associating descriptors with different time ranges, either hierarchically (a descriptor applies to the whole data or to a temporal subset of it) or sequentially (descriptors are associated with successive time segments).

(3) Query support

Audio, visual, and other descriptors can be used in cross-modal queries; for example, a query based on visual descriptions can retrieve audio data, and vice versa. Description schemes support prioritizing descriptors so that queries can be processed more efficiently, and these priorities can reflect levels of confidence or reliability. Descriptors can also serve as handles that refer directly to the data and allow the multimedia material to be manipulated.

2. Types of multimedia features

MPEG-7 supports several types of multimedia features:

N-dimensional spatio-temporal structure. Spatially, at the micro level this covers the texture and shape of objects; at the macro level it covers the spatial relationships between objects and the overall spatial layout. Temporally, it covers change over time, such as an object's trajectory or the duration of a music segment.

Objective features reflect properties of the audiovisual data itself, such as the color, shape, and texture of an object, or audio frequency.

Subjective features capture subjective perceptions of the audiovisual data, such as emotions (happy, angry) and style.

Production features record information such as the author, producer, and director.

Composition information covers scene composition, editing information, user preferences, and the like.

Concepts describe things such as events and activities.

In many cases it is desirable to use textual information in a description. Such text should be as independent of any particular language as possible, for example unambiguous text fields for the names of authors, films, and places.

Besides describing the content itself, a description needs to include other kinds of information about the multimedia data:

Form, such as the coding format and data size. This helps determine whether the material can be read by the user.

Conditions for accessing the material, which may include copyright, licensing and authorization information, and price.

Classification, which places the content into predefined categories, for example a parental rating.

Links to other relevant material, which help associate further information with the data.

Context, which records the occasion of a recording, for example "1996 Olympic Games, men's 200 m hurdles final".

Interaction, that is, tools for describing interactions related to the content, such as the interactions in tele-shopping that are linked to an advertisement.

MPEG-7 data may be physically co-located with the associated AV material, in the same data stream or the same storage system, but the description can also be stored anywhere else in the world. When content and its description are not co-located, mechanisms are needed to link the AV material and its MPEG-7 descriptions, and these links should work in both directions.

3. Description of visual data

MPEG-7 sets the following specific requirements for visual descriptors and description schemes:

(1) Feature types: visual descriptions support the following features (related to the types of information used in queries): color; visual objects; texture; sketches; shape; still and moving images; volume; spatial relationships (the relative placement of objects in images and image sequences, and topological relationships, i.e. spatial composition); motion (e.g. motion within a video shot, for retrieval using temporal composition information); deformation (e.g. bending of objects); the source of visual objects and its characteristics (e.g. source objects, source events, source attributes, events, event attributes); and models (e.g. MPEG-4 SNHC).

(2) Visualization using descriptions: MPEG-7 descriptions should allow a more or less coarse visualization of the indexed data.

(3) Visual data formats: digital video and film (e.g. MPEG-1/2/4), analog video and film, still images in electronic form (e.g. JPEG) or on paper, graphics, 3D models, and editing data associated with video.

(4) Visual data classes: natural video, still images, graphics, animation, 3D models, and editing information.

4. Description of auditory data

Similarly, audio descriptors and description schemes have the following requirements:

(1) Feature types: frequency contour, audio objects, timbre, harmony, frequency characteristics, amplitude envelope, temporal structure (including rhythm), textual content (speech or lyrics), sonic approximations (produced by humming a melody or vocalizing a sound effect), prototypical sounds (typical of query-by-example), spatial structure (for multichannel sources such as stereo or 5.1, where each channel has a specific mapping), sound sources and their characteristics (e.g. source objects, source events, source attributes, events, event attributes, and typically associated scenes), and models (e.g. MPEG-4 SAOL).

(2) Auralization using descriptions: the requirements are similar to those for visual data.

(3) Audio data formats: digital audio (e.g. MPEG-1 Audio, CD), analog audio (e.g. tape media), MIDI (including General MIDI and karaoke formats), model-based audio, and production data.

(4) Audio data classes: soundtracks (natural audio scenes), music, atomic sound effects (e.g. applause), speech, symbolic audio representations (MIDI, SNHC Audio), and mixing information (including effects).

V. Multimedia research and MPEG-7

MPEG-7 standardizes the description of many types of multimedia information, but it does not cover the extraction of descriptors or features, nor does it specify search engines or other programs that use the descriptions. Around MPEG-7, therefore, the following work on multimedia information access can be pursued.

1. Access interfaces

Research on general-purpose and application-specific query interfaces for multimedia information.
For example: playing a few notes on a keyboard to query for music; using the sound of screeching brakes to find car-chase scenes; drawing a few line segments on the screen to retrieve images containing similar graphics, signs, or symbols; specifying the colors and textures of objects to retrieve images with similar features; describing the movements of, and relationships between, a given set of objects to retrieve moving images with the described spatio-temporal relationships; or describing a plot to retrieve scenes with similar plots. Other open questions include how to combine such queries, how to bring interaction and prioritization into the query process, how to design a query language, and how to build browsing and visualization views, all toward more efficient and reliable interfaces for accessing multimedia information.

2. Feature extraction and retrieval engines

Automatic and semi-automatic feature extraction methods are very valuable for managing multimedia data at scale. Feature extraction and retrieval engines are discussed together because research on the two is closely linked through retrieval effectiveness and efficiency. Effectiveness means that the user or system finds what is actually being sought rather than something else; efficiency means that the desired results are obtained quickly. This requires features that better represent the content of media data (that is, features that discriminate well), as well as efficient index structures and algorithms.

3. Broader multimedia application research

MPEG-7 is not limited to retrieving multimedia information; it can be applied widely in other areas related to multimedia content management. Many applications and application domains will benefit from the MPEG-7 standard, and the applications proposed by MPEG-7 contain many topics worth studying.
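Section IV notes that the layer-N description refines layer N-1 so that queries can be processed layer by layer, and the discussion of retrieval engines calls for efficient index structures and algorithms. One common way to combine the two is coarse-to-fine filtering, sketched below in Python. Everything here is an assumption for illustration: the `LayeredDescription` type, the use of a 3-value mean color as layer 1 and a 4-bin histogram as layer 2, the L1 distance, and the threshold value are hypothetical and are not taken from MPEG-7.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class LayeredDescription:
    """Hypothetical two-layer description: layer 2 refines layer 1."""
    content_id: str
    coarse: Tuple[float, ...]  # layer 1: e.g. mean R, G, B in 0..1 (cheap to compare)
    fine: Tuple[float, ...]    # layer 2: e.g. a normalized 4-bin histogram (more detailed)


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of absolute differences between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def layered_query(query: LayeredDescription, db: List[LayeredDescription],
                  coarse_threshold: float, top_k: int = 2) -> List[str]:
    # Layer 1: keep only items whose coarse description is close enough to the query.
    candidates = [d for d in db if l1_distance(query.coarse, d.coarse) <= coarse_threshold]
    # Layer 2: rank only the surviving candidates with the finer, more expensive description.
    candidates.sort(key=lambda d: l1_distance(query.fine, d.fine))
    return [d.content_id for d in candidates[:top_k]]


db = [
    LayeredDescription("a", (0.90, 0.40, 0.10), (0.7, 0.2, 0.1, 0.0)),
    LayeredDescription("b", (0.80, 0.50, 0.20), (0.1, 0.6, 0.2, 0.1)),
    LayeredDescription("c", (0.10, 0.30, 0.90), (0.0, 0.1, 0.2, 0.7)),
]
query = LayeredDescription("q", (0.85, 0.45, 0.15), (0.6, 0.3, 0.1, 0.0))
print(layered_query(query, db, coarse_threshold=0.3))  # prints ['a', 'b']; "c" is pruned at layer 1
```

The cheap first layer prunes candidates before the costlier comparison runs. The trade-off is that a threshold set too tight can discard relevant items, so in practice it would need tuning for the data at hand.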