What programs/scripts have you written in Python?

Some netizens asked questions on Quora, "What is the most powerful program/script you have written in Python?" This article is an excerpt from several small projects of three foreign programmers, including code.

Manoj Memana Jayakumar, 3000+ top

Update: With these scripts, I found a job! See my reply in this post, "Has anyone got a job through Quora? Or somehow made lots of money through Quora?"

1. Movie/TV subtitles one-click downloader

We often encounter such a situation, which is to open the subtitle website subscene or opensubtitles, search for the name of the movie or TV series, then select the correct crawler, download the subtitle file, extract, cut and paste it into the folder where the movie is located, and the subtitle file needs to be renamed to match the name of the movie file. Is it too boring? By the way, I wrote a script to download the correct movie or TV subtitle file and store it in the same location as the movie file. All the steps can be completed with just one click. Is it forced?

Please watch this YouTube video: https://youtu.be/Q5YWEqgw9X8

The source code is stored in GitHub: subtitle-downloader

Update: Currently, the script supports simultaneous download of multiple subtitle files. Steps: Hold down Ctrl and select the multiple files for which you want to download subtitles. Finally, execute the script.

2. IMDb Query / Spreadsheet Generator

I am a movie fan and like to watch movies. I always get confused about which movie to watch because I have collected a lot of movies. So, what should I do to eliminate this confusion and choose a movie to watch tonight? That's right, it's IMDb. I open http://imdb.com, enter the name of the movie, watch the rankings, read and comment, and find a movie worth watching.

However, I have too many movies. Who would want to enter the names of all the movies in the search box? I certainly won't do this, especially if I believe "if something is repetitive, then it should be automated." So I wrote a python script to get the data using the unofficial IMDb API. I select a movie file (folder), right click, select 'Send to', then click IMDB.cmd (by the way, IMDB.cmd is the python script I wrote), and that's it.

My browser will open the exact page of this movie on the IMDb website.

The above operation can be done with just one click of a button. If you can't understand how cool this script is and how much time it can save you, check out this YouTube video: https://youtu.be/JANNcimQGyk

From now on, you no longer need to open your browser, wait for the IMDb page to load, and type the name of the movie. This script will do all the work for you. As usual, the source code is placed on GitHub: imdb with instructions. Of course, since this script must remove meaningless characters in files or folders, such as "DVDRip, YIFY, BRrip", etc., there will be a certain percentage of errors when running the script. But after testing, this script works fine on almost all my movie files.

2014-04-01 update:

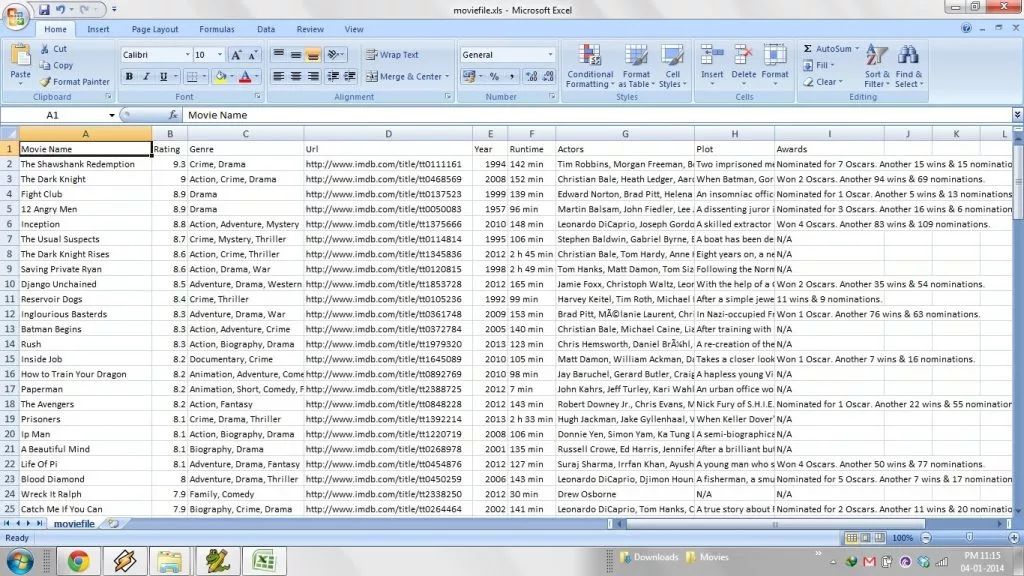

Many people are asking if I can write a script that can find the details of all the movies in a folder, because it is very troublesome to find the details of one movie at a time. I have updated this script to support processing the entire folder. The script analyzes all the subfolders in this folder, grabs the details of all the movies from IMDb, and then opens a spreadsheet that sorts all the movies in descending order, according to the ranking on IMDb. This table contains (all movies) in IMDb URL, year, plot, category, award information, cast information, and other information you may find at IMBb. The following is an example of a table generated after the script is executed:

Your very own personal IMDb database! What more can a movie buff ask for? ;)

Source on GitHub: imdb

You can also have a personal IMDb database! Can a movie fan still ask for more? :)

Source code in GitHub: imdb

3. theoatmeal.com serial comic downloader

I personally like Matthew Inman's comics. They are crazy and funny, but they are thought-provoking. However, I am tired of repeatedly clicking on the next one before I can read every comic. In addition, since each comic is composed of Dover images, it is very difficult to manually download these comics.

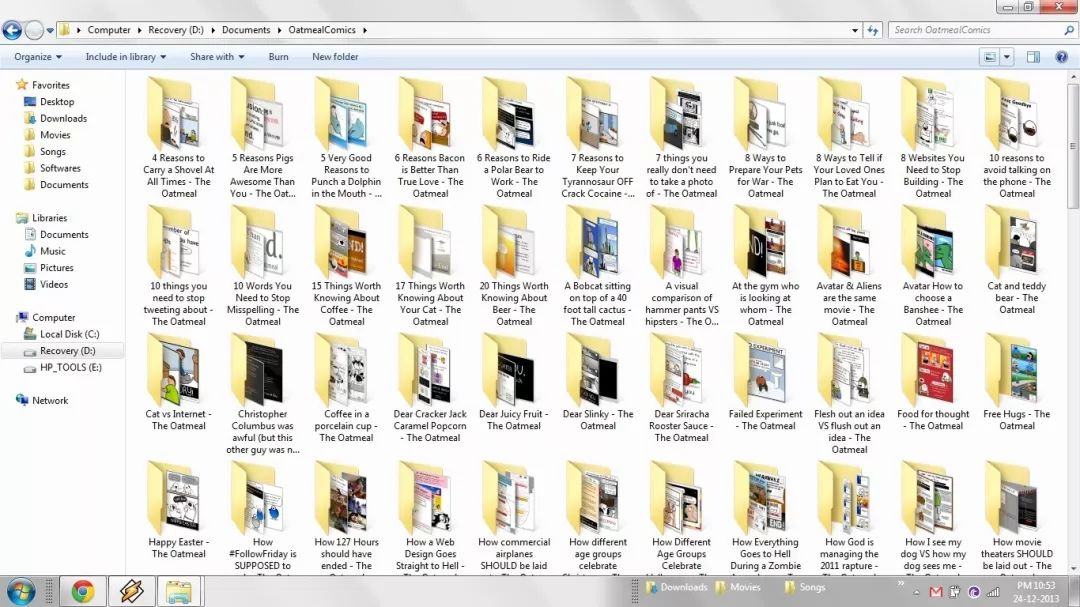

For the above reasons, I wrote a python script to download all the comics from this site. This script uses BeautifulSoup (http://B...) to parse HTML data, so you must install BeautifulSoup before running the script. The downloader for downloading oatmeal (a comic book by Matthew Ingman) has been uploaded to GitHub: theoatmeal.com-downloader. (Manga) After downloading the folder is like this:

4. someecards.com Downloader

After successfully downloading the entire comic from http://, I was wondering if I could do the same thing, from another site I liked - funny, the only http:// download something?

The problem with somececards is that the image naming is completely random, all images are not in a specific order, and there are a total of 52 large categories, each with thousands of images.

I know that if my script is multi-threaded, it would be perfect because there is a lot of data to parse and download, so I assign a thread to each page in each category. This script will download funny e-cards from each individual category of the site and put each one in a separate folder. Now, I have the most private e-card collection on the planet. After the download is complete, my folder looks like this:

That's right, my private collection includes: 52 categories, 5036 e-cards. The source code is here: someecards.com-downloader

EDIT: A lot of people ask me if I can share all the files I downloaded (here, I want to say). Because my network is not stable, I can't upload my favorites to the network hard drive, but I have uploaded a seed file. You can download it here: somecards.com Site Rip torrent

Plant seeds, spread love :)

Akshit Khurana, 4400+ top

Thanks to more than 500 friends for sending me birthday wishes on Facebook.

There are three stories that make my 21st birthday unforgettable. This is the last story. I tend to personally comment on each blessing, but using python to do better.

# Thanks everyone who wished me on my birthday import requests import json # Aman's post time AFTER = 1353233754 TOKEN = ' ' def get_posts(): """Returns dictionary of id, first names of people who posted on my wall between start and end time""" Query = ("SELECT post_id, actor_id, message FROM stream WHERE " "filter_key = 'others' AND source_id = me() AND " "created_time > 1353233754 LIMIT 200") payload = {'q': query, 'access_token': TOKEN} r = requests.get('https://graph.facebook.com/fql', params=payload) result = json.loads(r.text) return result['data'] def commentall(wallposts): """Comments thank you on all posts""" #TODO convert to batch request later for wallpost in wallposts: r = requests.get('https://graph.facebook.com/%s' % wallpost['actor_id']) Url = 'https://graph.facebook.com/%s/comments' % wallpost['post_id'] user = json.loads(r.text) message = 'Thanks %s :)' % user['first_name'] payload = {'access_token': TOKEN, 'messa Ge': message} s = requests.post(url, data=payload) print "Wall post %s done" % wallpost['post_id'] if __name__ == '__main__': commentall(get_posts())

In order to run the script smoothly, you need to get the token from the Graph API Explorer (with appropriate permissions). This script assumes that all posts after a specific timestamp are birthday wishes.

Despite a little change to the comment feature, I still like every post.

When my clicks, comments, and comment structures pop up in ticker (a feature of Facebook, friends can see what another friend is doing, such as likes, listen to songs, watch movies, etc.), one of my Friends soon discovered that this matter must be flawed.

Although this is not my most satisfying script, it is simple, fast and fun.

When I was discussing with Sandesh Agrawal in the web lab, I had the idea to write this script. To this end, Sandesh Agrawal expressed his deep gratitude for the delay in laboratory work.

Tanmay Kulshrestha, 3300+ top

Ok, before I lost this project (a pig-like friend formatted my hard drive, all my code was on that hard drive) or, before I forgot the code, I decided to answer the question.

Organize photos

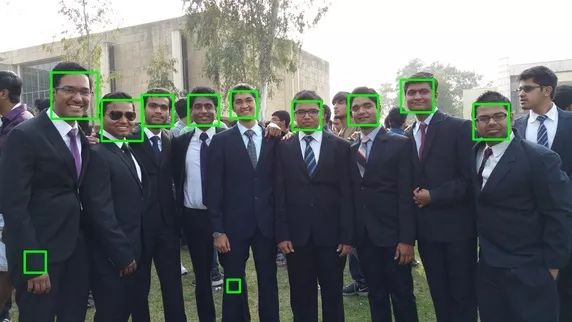

After I was interested in image processing, I was always working on machine learning. I wrote this interesting script in order to categorize the images, much like what Facebook does (of course this is an algorithm that is not precise enough). I used OpenCV's face detection algorithm, "haarcascade_frontalface_default.xml", which detects faces from a single photo.

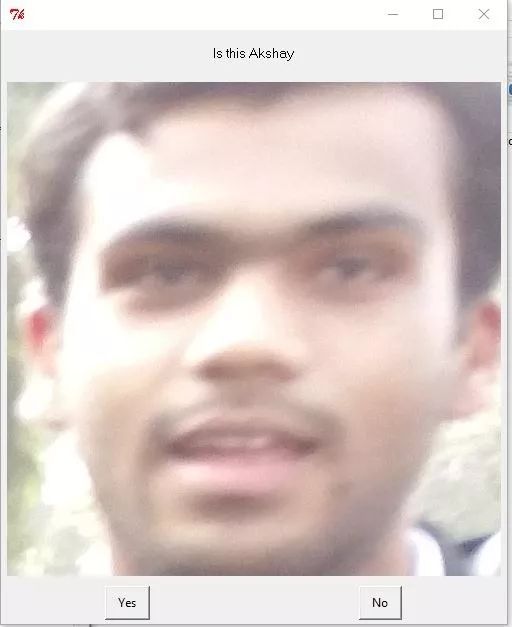

You may have noticed that some parts of this photo were mistakenly recognized as faces. I tried to fix some parameters (to fix this problem), but some places were mistakenly recognized as faces, which is caused by the relative distance of the camera. I will solve this problem in the next stage (training steps).

This training algorithm requires some training material, and each person needs at least 100-120 training materials (of course, more good). I was too lazy to pick photos for everyone and copy them to the training folder. So, as you may have guessed, this script will open a picture, recognize the face, and display each face (the script will predict each face based on the training material at the current node). With each photo you tag, Recognizer will be updated and will include the last training material. You can add new names during the training process. I made a GUI using the python library tkinter. Therefore, most of the time, you have to initialize a small part of the photo (name the face in the photo), and other work can be given to the training algorithm. So I trained Recognizer and then let it (Recognizer) handle all the images.

I use the name of the person contained in the image to name the image (for example: Tanmay&*****&*****). Therefore, I can traverse the entire folder and then search for the image by entering the name of the person.

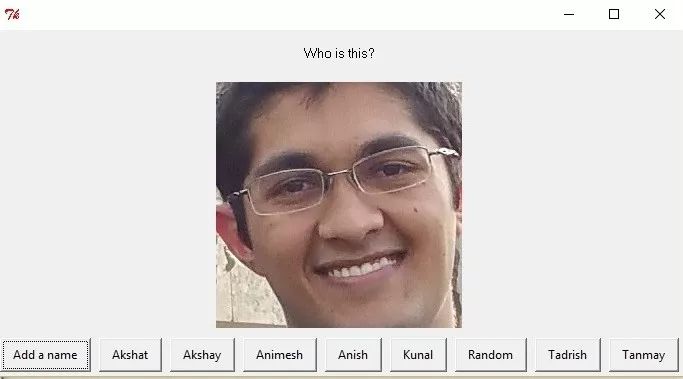

In the initial state, when a face has no training material (the name of the face is not included in the material library), you need to ask his/her name.

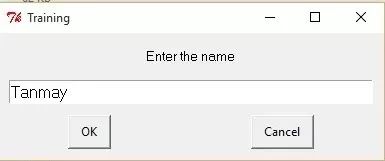

I can add a name like this:

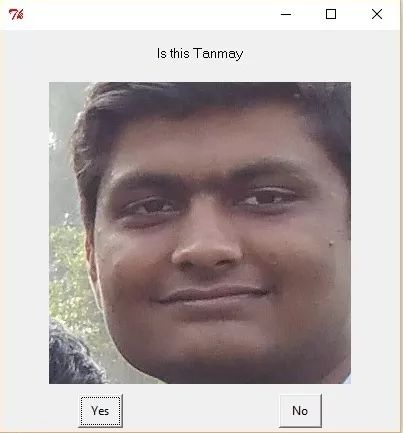

When you train a few pieces of material, it will look like this:

The last one is a workaround for dealing with spam random blocks.

The final folder with the name.

So now looking for pictures has become quite simple. By the way, I am sorry (I) to enlarge these photos.

The Friction Disc is a kind of Printer Accessories.

The cartridge is irradiated by laser beam to adsorb toner, and then the toner is hot pressed by fixing roller for printing. In this process, there will be part of toner residual, which can not be "granules returned to the warehouse"; Automatic cleaning function is not adsorbed new toner particles and directly print, will remain toner away, fully ensure the next printing effect. And the Plate-Grid plays an important role. When high voltage generator to a high voltage electrode, wire electrode with reseau formed between a strong electric field, and release the corona, wire electrode and the photosensitive drum ionizes the air between the air ions migrate to the drum surface, make the photoconductor (drum) surface is full of charge, so can spare toner "adsorption to warehouse", so as to save toner, The purpose of reducing environmental pollution.

High Accuracy Friction Disc,Semi-Etching Surface Friction Disc,Drive Shaft Parts ,Printer Friction Disc

SHAOXING HUALI ELECTRONICS CO., LTD. , https://www.cnsxhuali.com