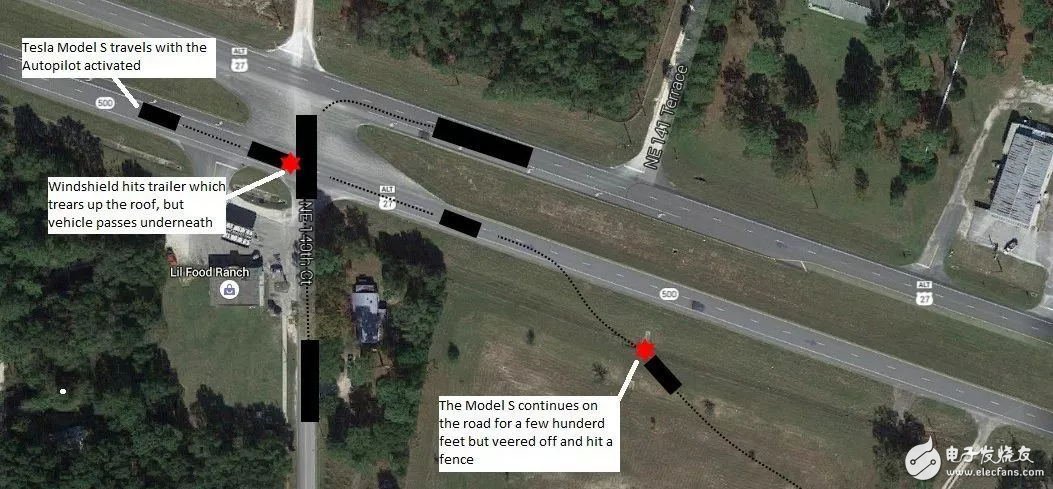

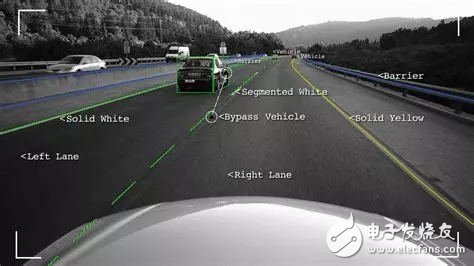

As one of the representative brands equipped with an Autopilot (automatic assisted driving) system, Tesla is regarded as a benchmark by both the industry and consumers. At every product launch, Tesla CEO Elon Musk has emphasized that autonomous vehicles will play a vital role in improving traffic safety and accelerating the world's transition to sustainable energy. Compared with manual driving, Tesla says, fully automatic driving will fundamentally improve vehicle safety, lower transportation costs for owners, and offer an "on-demand" mode of travel to consumers who do not own a vehicle. This has led many consumers to believe that once Autopilot is switched on, their Tesla can drive itself completely, as in the movies.

However, reality falls short of that picture. In a series of tragic accidents, Teslas with Autopilot engaged have hit a road sweeper, a trailer, a concrete barrier, a fire truck stopped ahead... Tesla's explanation has been that the driver did not keep his hands on the steering wheel, ready to respond to an emergency at any time, after turning Autopilot on. This places the responsibility on the driver. In China, the translation of Autopilot was also changed from "automatic driving" to "automatic assisted driving". Musk himself said at a press conference: "It's autopilot not autonomous." (Autopilot is not automatic driving, but automatic assisted driving.) The accidents make clear that the current system cannot recognize and react to static obstacles 100% of the time, so it cannot be called automatic driving.

So why can't a Tesla equipped with the almost deified Autopilot system avoid an obstacle that is visible from hundreds of meters away? Today we have invited several senior figures from the smart driving industry to share their views. Some of them cannot disclose their identities because of their business interests.
It is precisely because of this that we can really answer the questions consumers have in mind.

Before we begin, consider a question: if you had to choose between "braking when there is no need to brake" and "not braking when there is a need to brake", which would you choose? I believe more than 90% of people would choose the former, because the latter could be fatal. In practice, the choice is exactly the opposite. Engineers working on Autopilot-style systems choose the latter without exception. A setting that sounds extremely dangerous is one the engineers chose deliberately. Why?

With current technology, fully automatic driving is not yet achievable. Even though the vehicles carry a great deal of hardware and extensive simulations are run during development, the real road environment still produces many situations the system cannot judge. When that happens, the system has only two choices: a "false positive" or a "false negative". That is, when the system cannot clearly determine whether there is an obstacle ahead, should it brake immediately just in case (a false alarm), or should it ignore the uncertain danger (a missed detection)?

Many people feel the system should lean toward false positives. In the spirit of "better safe than sorry", accidents would be avoided as far as possible. But that is not how it works out. Imagine you are on the highway with Autopilot on, a car is approaching fast from behind, and the road ahead is wide open. A large steel plate appears on the road. The radar judges it to be a huge obstacle and brakes hard, and the car behind cannot react in time... Here the only safe choice is to do nothing. Millimeter-wave radar in particular is sensitive to reflections from metal.
A steel plate on the road, a raised manhole cover, or even the bottom of a can looks like a wall to a millimeter-wave radar. On real roads, which are this complex, unexplained braking not only makes for a poor driving experience but also increases the risk. Engineers therefore adopt the "miss" logic, both to improve the driving experience and to avoid the safety hazards caused by excessive braking.

However, once the system misses a danger that is really there, the result is very likely to be a serious accident. This is why manufacturers currently stress that "hands should not leave the steering wheel when Autopilot is turned on": the driver must be ready to take over the vehicle at any moment. Tesla also states clearly in its user manual that Traffic-Aware Cruise Control may not brake or slow down for stationary vehicles, especially in this situation: you are driving faster than 80 kilometers per hour, the vehicle ahead of you changes lanes, and a stationary vehicle or object suddenly appears in front of you. Drivers should always watch the road ahead and be ready to take immediate corrective action. Relying entirely on Traffic-Aware Cruise Control can lead to serious injury or death.

Back to the original question: why can't Tesla, which claims that "all vehicles in production are capable of fully automatic driving", avoid an obstacle that is visible from hundreds of meters away? We can analyze a few well-known Tesla accidents. In May 2016, a Tesla Model S driving in Autopilot mode collided with a turning trailer, killing the driver.
Tesla explained the accident as follows: the Model S was traveling on a divided highway with Autopilot engaged when a trailer crossed the road perpendicular to the Model S. In bright daylight, neither the driver nor Autopilot noticed the white side of the trailer, so the brakes were not applied in time. Because the trailer was crossing the road and rode high off the ground, the Model S passed underneath it and struck the bottom of the trailer.

Tesla did not give a clear and convincing explanation for the accident. However, as engineers in the industry, and given how intelligent driving technology has developed and the difficulties it has run into, we can make the following inference. In terms of hardware, this Tesla carried the first-generation Autopilot system: a front camera from Mobileye, a millimeter-wave radar from Bosch, and 12 ultrasonic sensors. In terms of priority, the system was camera-led.

Tesla blamed the strong sunlight and the white trailer body for the camera's failure to see the trailer. But these are perhaps only secondary causes. The key issue is likely that the Mobileye camera used in this generation was trained mainly on the fronts and rears of vehicles, with limited training on their sides, and the trailer it met had a fairly unusual shape. Judging from the outline, the camera did not treat it as an obstacle. And because the space under the trailer is empty, the millimeter-wave radar did not get a usable reflection when scanning. Alternatively, the radar may have judged that the trailer ahead could be dangerous, but because the camera was dominant, the radar's judgment did not carry enough weight.
The two uncertain judgments were superimposed, the system decided to "miss", and the accident followed. Dan Galves, an executive at Mobileye, also said after the accident that current collision-avoidance technology, or automatic emergency braking, is suited only to the car-following state and is designed only for problems that arise while following another car. In other words, when a vehicle crosses laterally, the current Autopilot system does not have sufficient judgment.

In September of the same year, Tesla announced an upgrade to Autopilot. The second-generation Autopilot would use radar instead of the camera as the dominant sensor, judging the surroundings with eight cameras and 12 ultrasonic sensors. The settings were also adjusted: if the driver does not hold the steering wheel for a certain period, the system issues an alert, and if the driver repeatedly ignores the alerts, the automatic steering function is switched off. The statement also said that, so the vehicle can better process the data collected by its sensors, it will carry a more powerful computer, with 40 times the computing power of the previous generation, running a new neural network system developed by Tesla to process vision, sonar and radar signals. The system provides a view the driver cannot see, covering all directions at once and at a speed far beyond human perception.

With this, Tesla has the hardware foundation for autonomous driving. However, this does not mean that Tesla will have full self-driving capability right away, because each individual sensor, and the fusion between different sensors, needs time to learn and improve.

To sum up: many brands are promoting their smart driving capabilities and painting a bright future for consumers.
But as long as clauses such as "the driver cannot give up responsibility" still exist, the most direct responsibility for an accident will be placed on the driver. So although the era of fully automatic driving is just around the corner, the best choice for now is still not to blindly trust any so-called "autopilot" system.
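
As a rough illustration of the false positive versus false negative trade-off and the camera-led weighting discussed in this article, here is a minimal Python sketch. Every name, weight, confidence value, and threshold in it is a hypothetical assumption chosen for illustration; it does not describe Tesla's or Mobileye's actual software.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical per-sensor confidence (0.0 to 1.0) that an obstacle is ahead."""
    camera: float
    radar: float


def fused_confidence(d: Detection, camera_weight: float = 0.8) -> float:
    """Weighted average in which the camera dominates (assumed weight of 0.8)."""
    return camera_weight * d.camera + (1.0 - camera_weight) * d.radar


def should_brake(d: Detection, threshold: float, camera_weight: float = 0.8) -> bool:
    """Brake only when the fused confidence reaches the chosen threshold."""
    return fused_confidence(d, camera_weight) >= threshold


# Assumed scenario values, not measurements.
# Steel plate on the road: the radar sees a "wall", the camera sees nothing.
steel_plate = Detection(camera=0.05, radar=0.95)
# White trailer crossing the road: the camera misses it, the radar is unsure.
crossing_trailer = Detection(camera=0.10, radar=0.70)

CAUTIOUS = 0.2  # low threshold: leans toward false positives (phantom braking)
TOLERANT = 0.6  # high threshold: leans toward false negatives (missed obstacles)

for name, d in [("steel plate", steel_plate), ("crossing trailer", crossing_trailer)]:
    print(name, should_brake(d, CAUTIOUS), should_brake(d, TOLERANT))
```

With these assumed numbers, the cautious threshold brakes in both scenarios, including the harmless steel plate, while the tolerant threshold brakes in neither, including the real trailer. No single threshold gets both cases right, which is the dilemma the engineers describe.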
